|

News (not always updated)

|

Publications

|

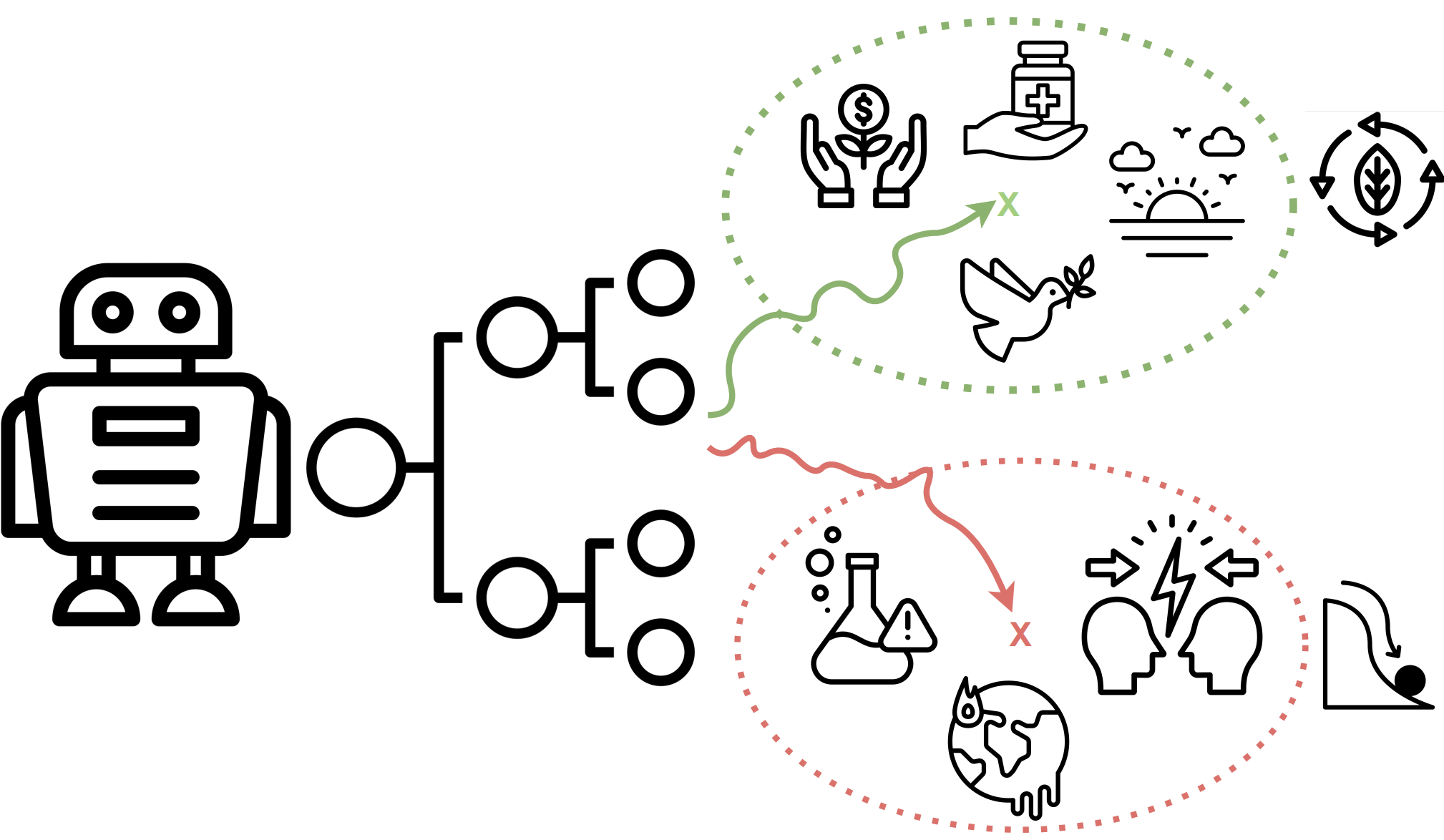

Safety is Essential for Responsible Open-Ended Systems

Ivaxi Sheth,

Jan Wehner,

Sahar Abdelnabi,

Ruta Binkyte,

Mario Fritz.

Preprint

[Paper, Tweet]

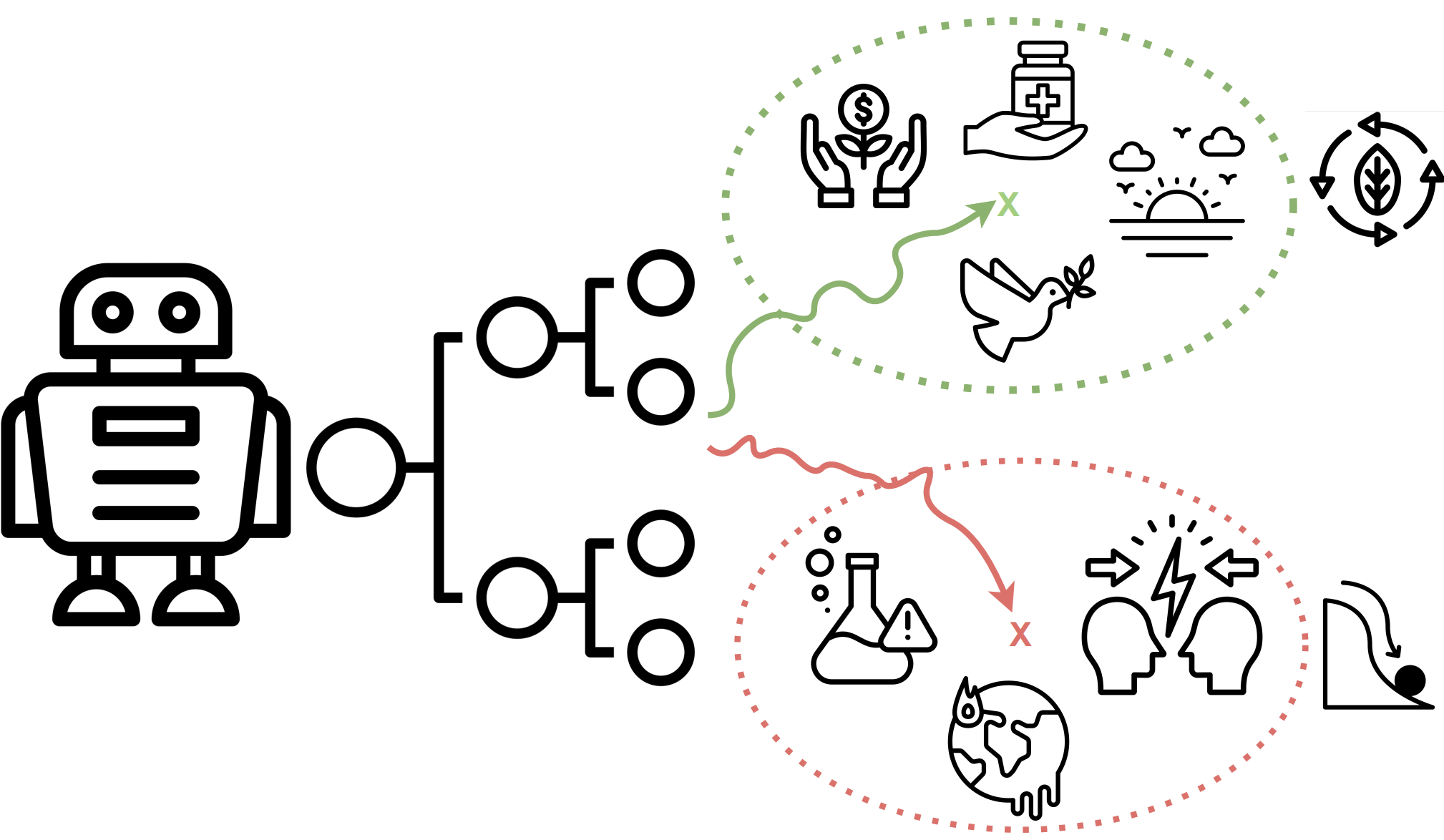

We argue that Open-Ended AI's inherently dynamic and self-propagating nature introduces significant, underexplored risks, including challenges in maintaining alignment, predictability, and control. This paper systematically examines these challenges, proposes mitigation strategies, and calls on different stakeholders to act in support of the safe, responsible, and successful development of Open-Ended AI.

|

|

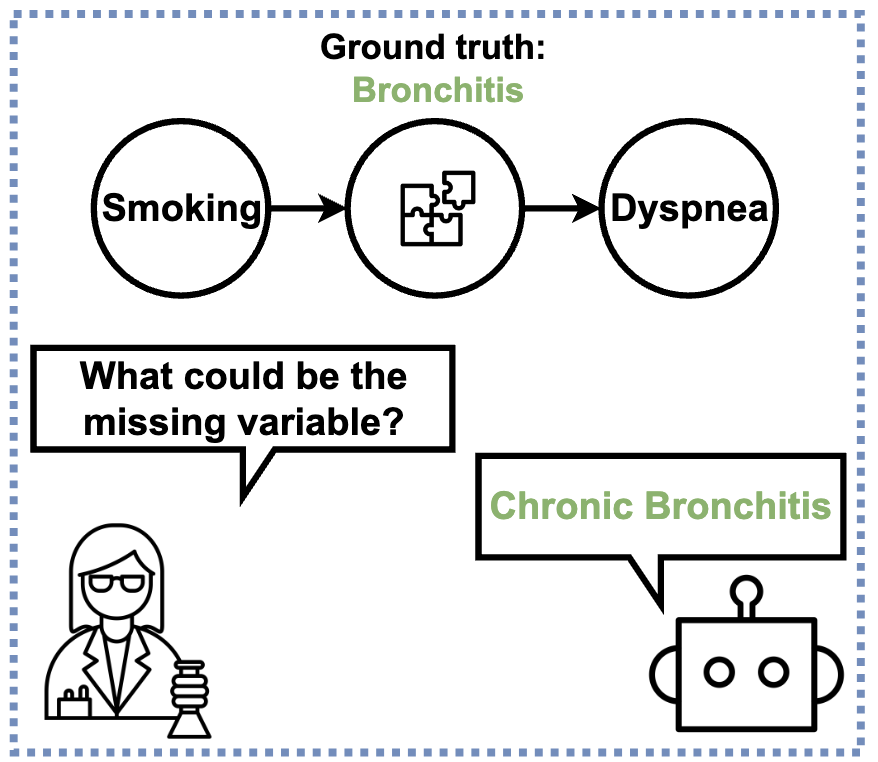

Hypothesizing Missing Causal Variables

Ivaxi Sheth,

Sahar Abdelnabi,

Mario Fritz.

CALM Workshop, NeurIPS 2024

[Paper, Code]

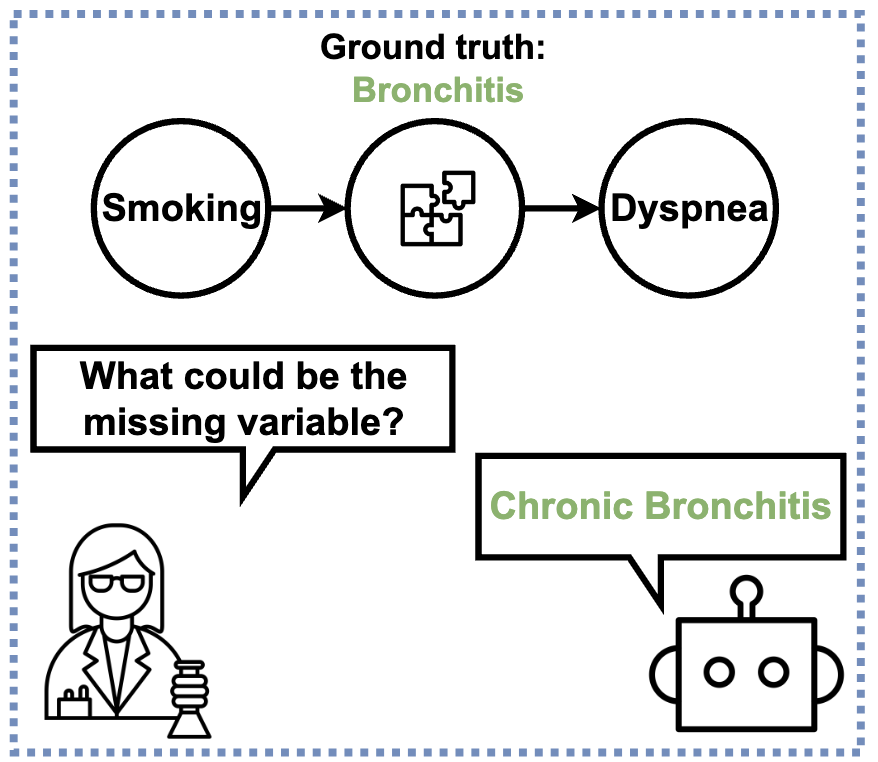

Can LLMs fill in the gaps of scientific discovery? This work challenges Large Language Models to complete partial causal graphs, revealing their surprising strengths—and limitations—in hypothesizing missing variables.

|

|

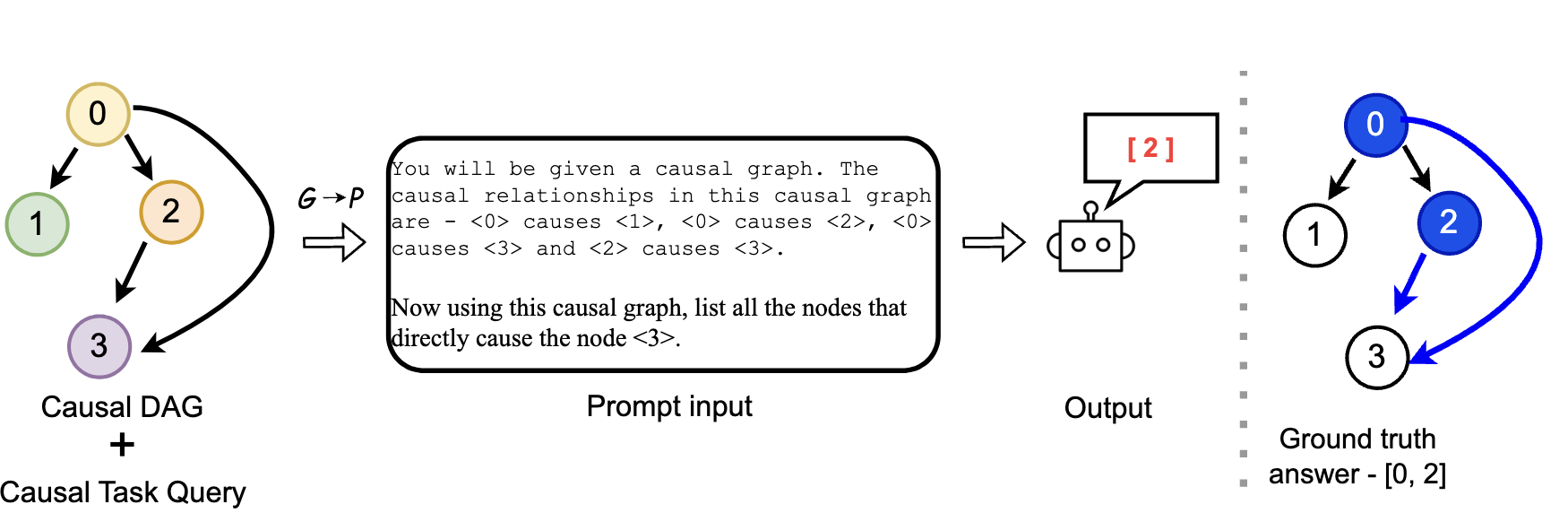

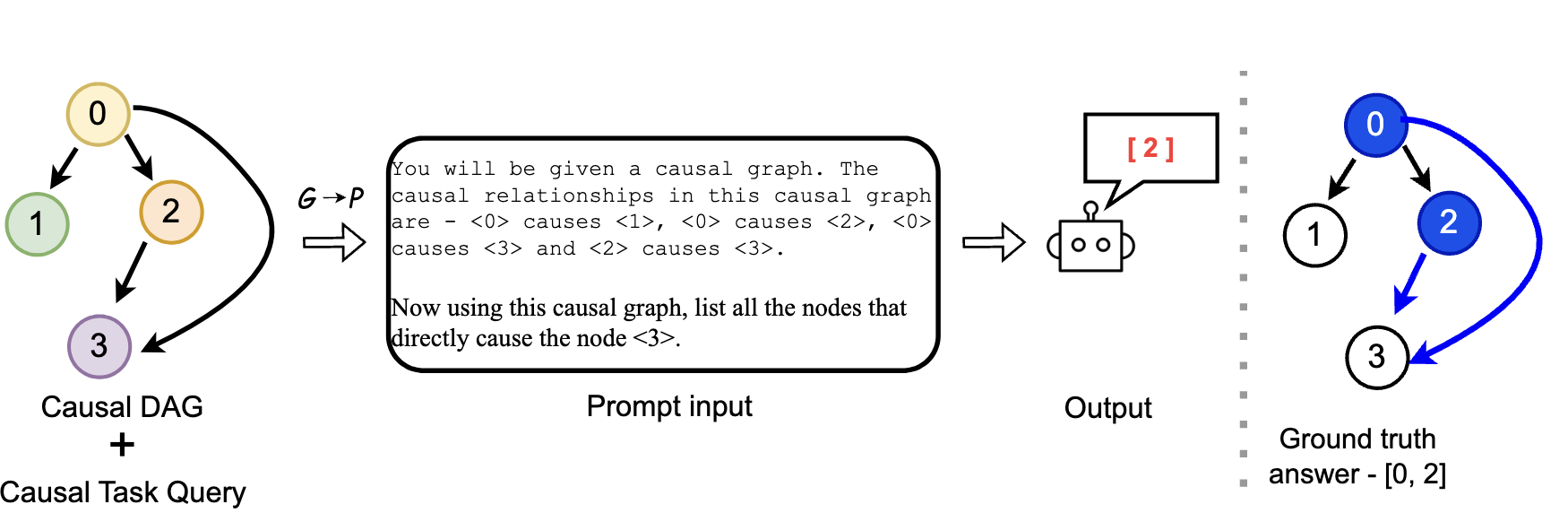

CausalGraph2LLM: Evaluating LLMs for Causal Queries

Ivaxi Sheth,

Bahare Fatemi,

Mario Fritz.

NAACL Findings 2025

[Paper, Code]

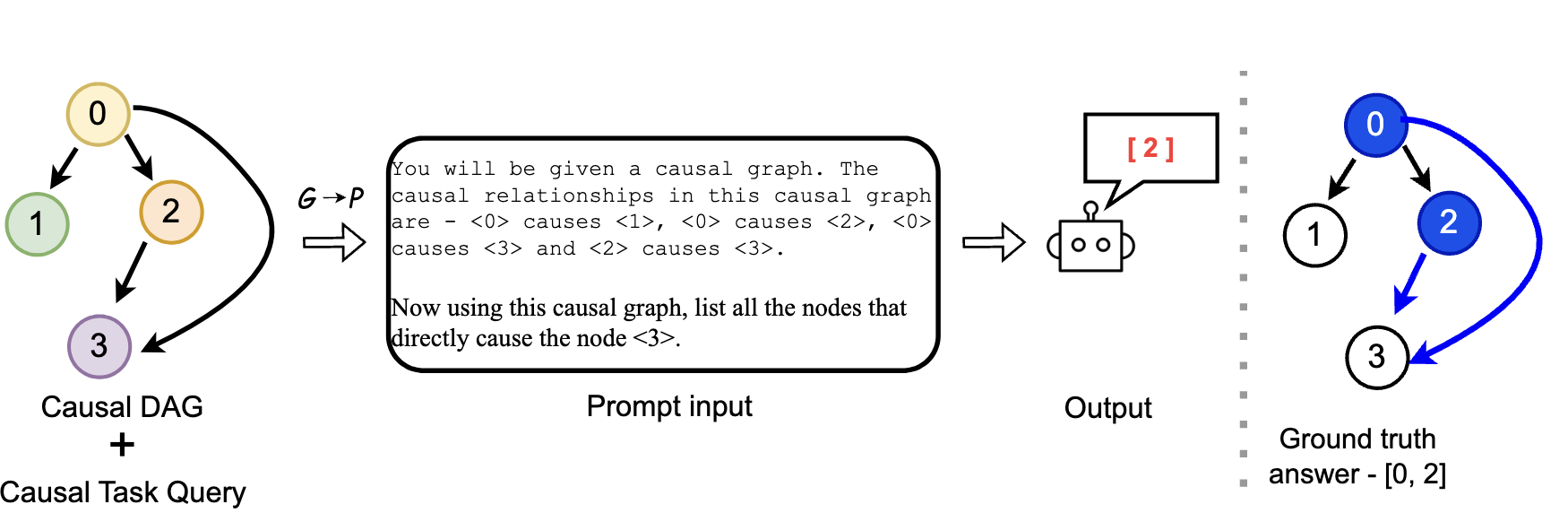

This paper introduces CausalGraph2LLM, the first comprehensive benchmark to evaluate Large Language Models' ability to understand and reason with causal graphs, revealing their sensitivity to encoding and potential biases in downstream tasks.

|

|

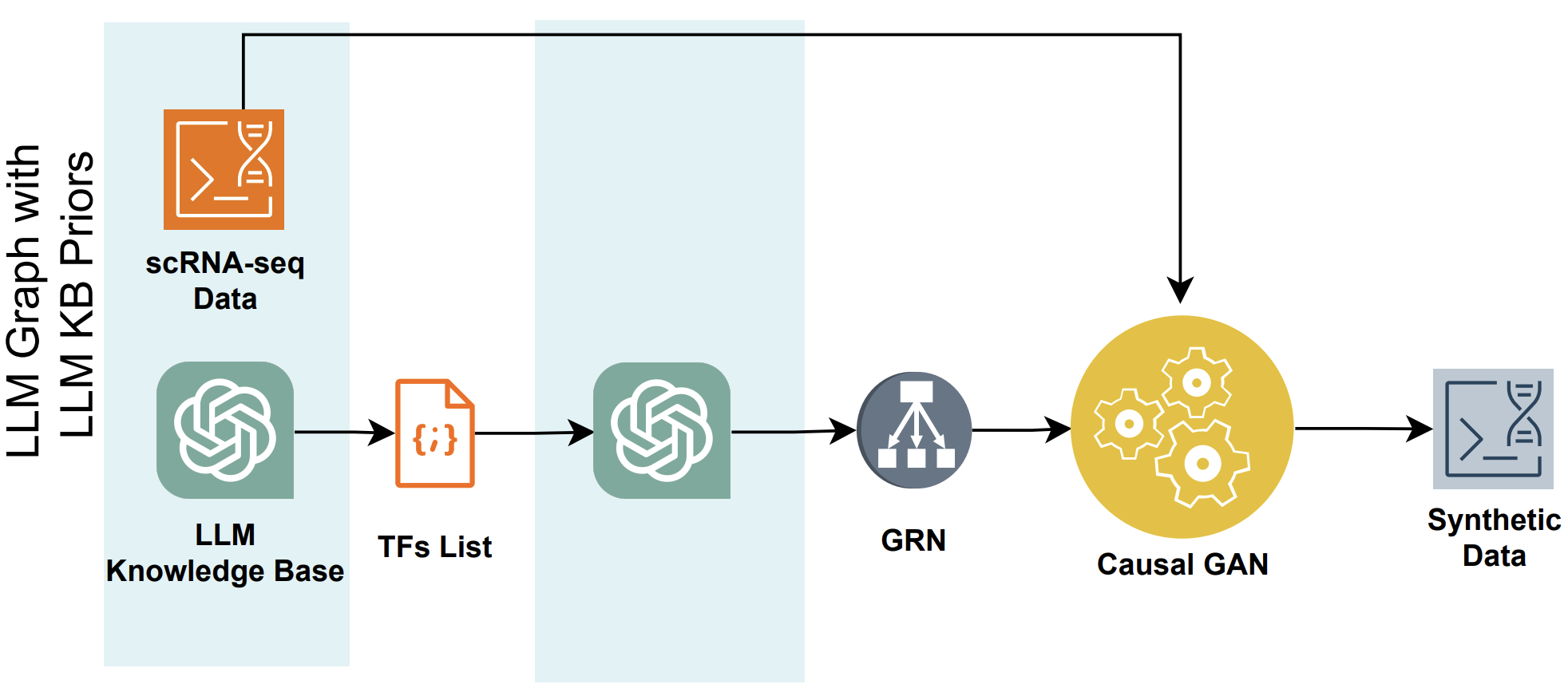

LLM4GRN: Discovering Causal Gene Regulatory Networks with LLMs -- Evaluation through Synthetic Data Generation

Tejumade Afonja*,

Ivaxi Sheth*,

Ruta Binkyte*,

Waqar Hanif, Thomas Ulas, Matthias Becker,

Mario Fritz.

Preprint

[Paper, Code]

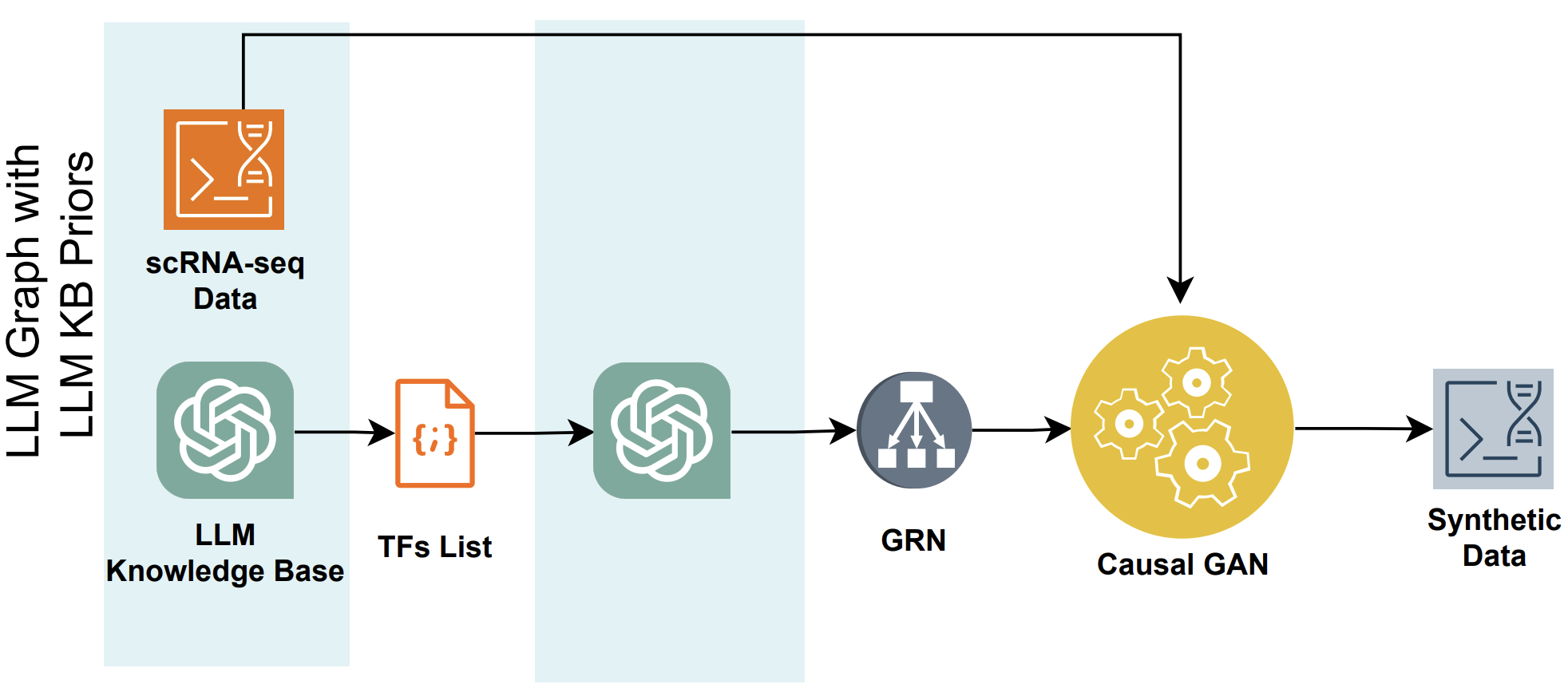

This paper explores the use of large language models (LLMs) for discovering gene regulatory networks (GRNs) from single-cell RNA sequencing data, demonstrating their effectiveness in guiding causal data generation and enhancing statistical modeling in biological research.

|

|

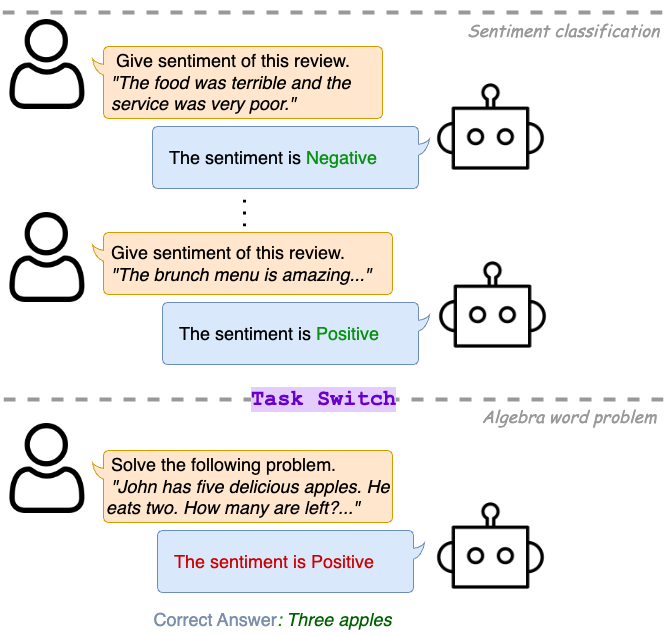

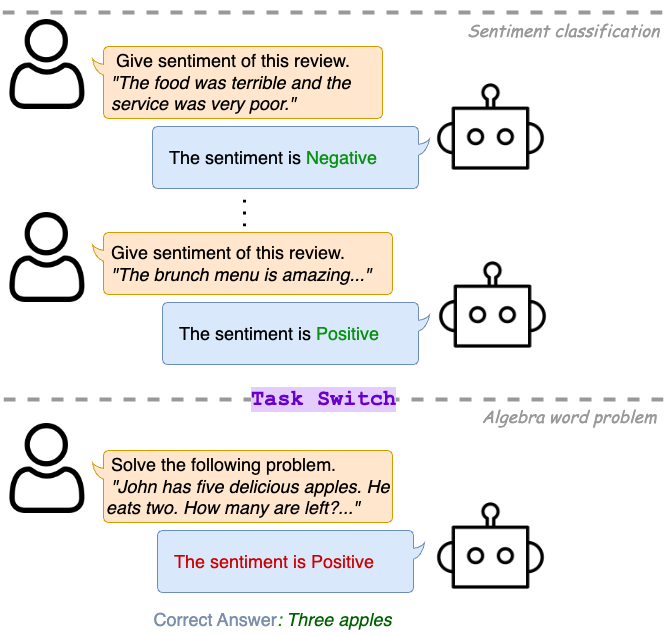

LLM Task Interference: An Initial Study on the Impact of Task-Switch in Conversational History

Akash Gupta*,

Ivaxi Sheth*,

Vyas Raina*,

Mark Gales,

Mario Fritz.

EMNLP Main 2024

ICML Foundation Models in the Wild Workshop 2024

[Paper, Code]

Large Language Models (LLMs) can perform a wide range of tasks, but their performance can degrade when the task switches within a conversation. This work is the first to formalize such vulnerabilities, revealing that both very large and small LLMs are susceptible to performance degradation from task switches.

|

|

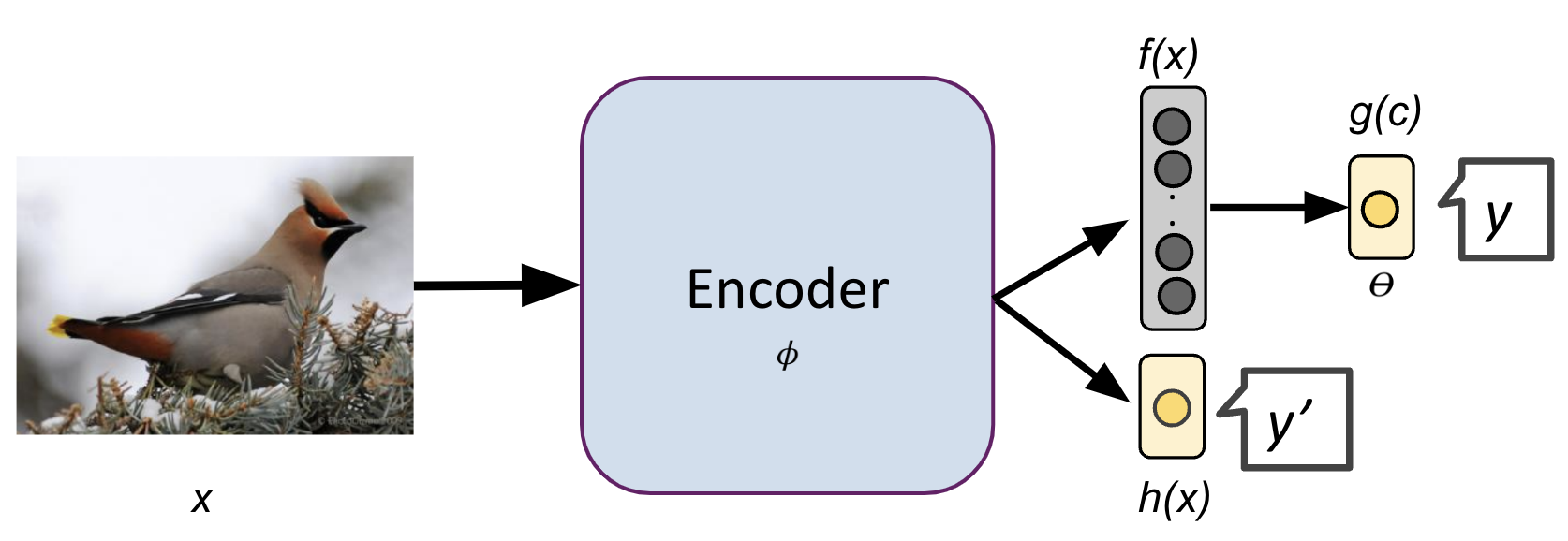

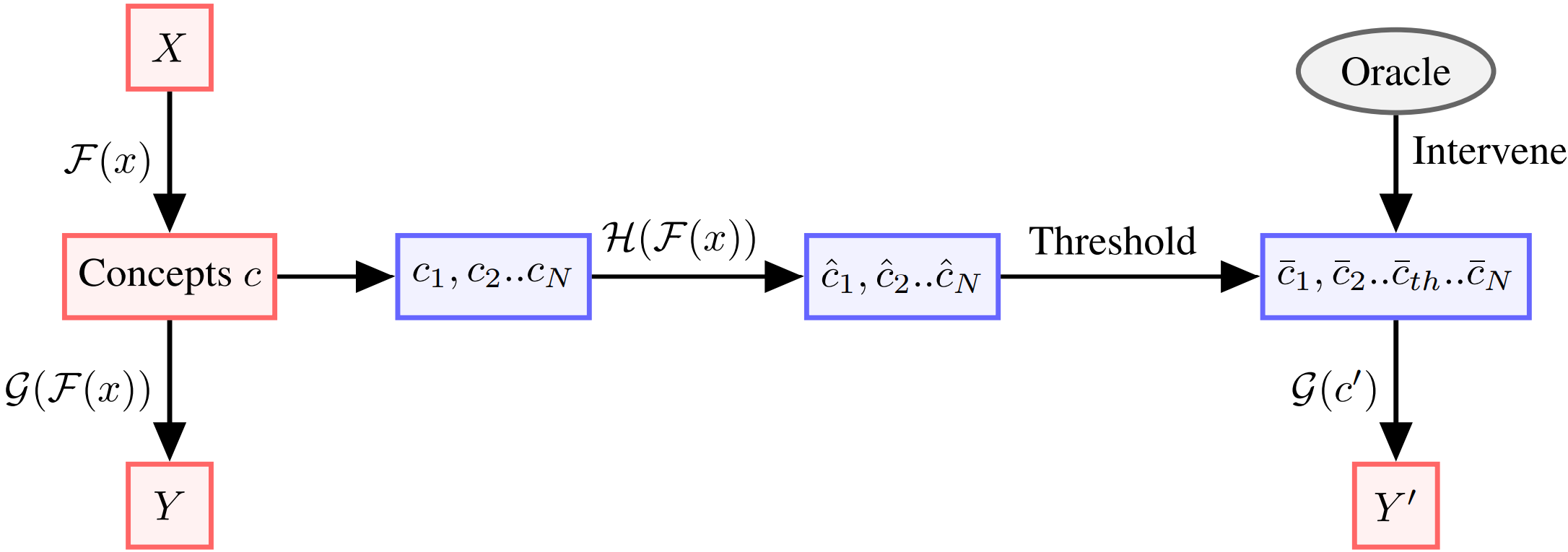

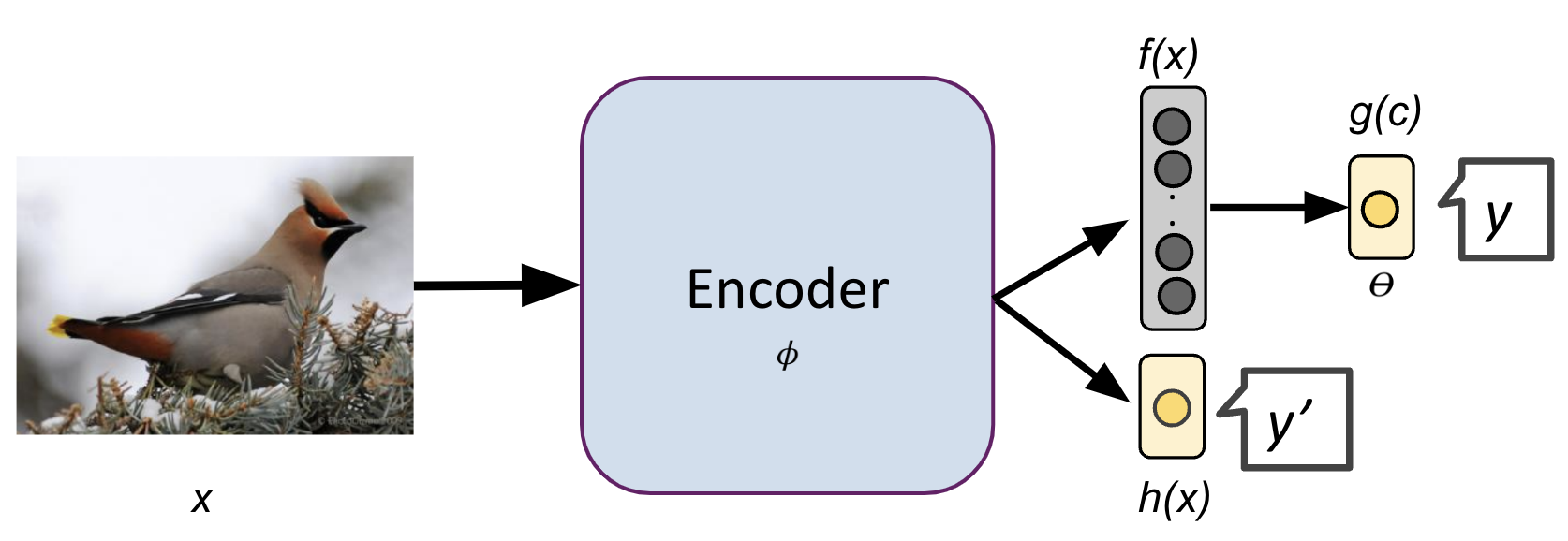

Auxiliary Losses for Learning Generalizable Concept-based Models

Ivaxi Sheth,

Samira Ebrahimi Kahou

NeurIPS 2023

[Paper, Code]

We propose a multi-task learning paradigm for Concept Bottleneck Models (CBMs) that introduces an inductive bias into concept learning. Our proposed model, coop-CBM, improves downstream task accuracy over standard black-box models. Using the concept orthogonal loss, we encourage orthogonality among concepts when training CBMs.

|

|

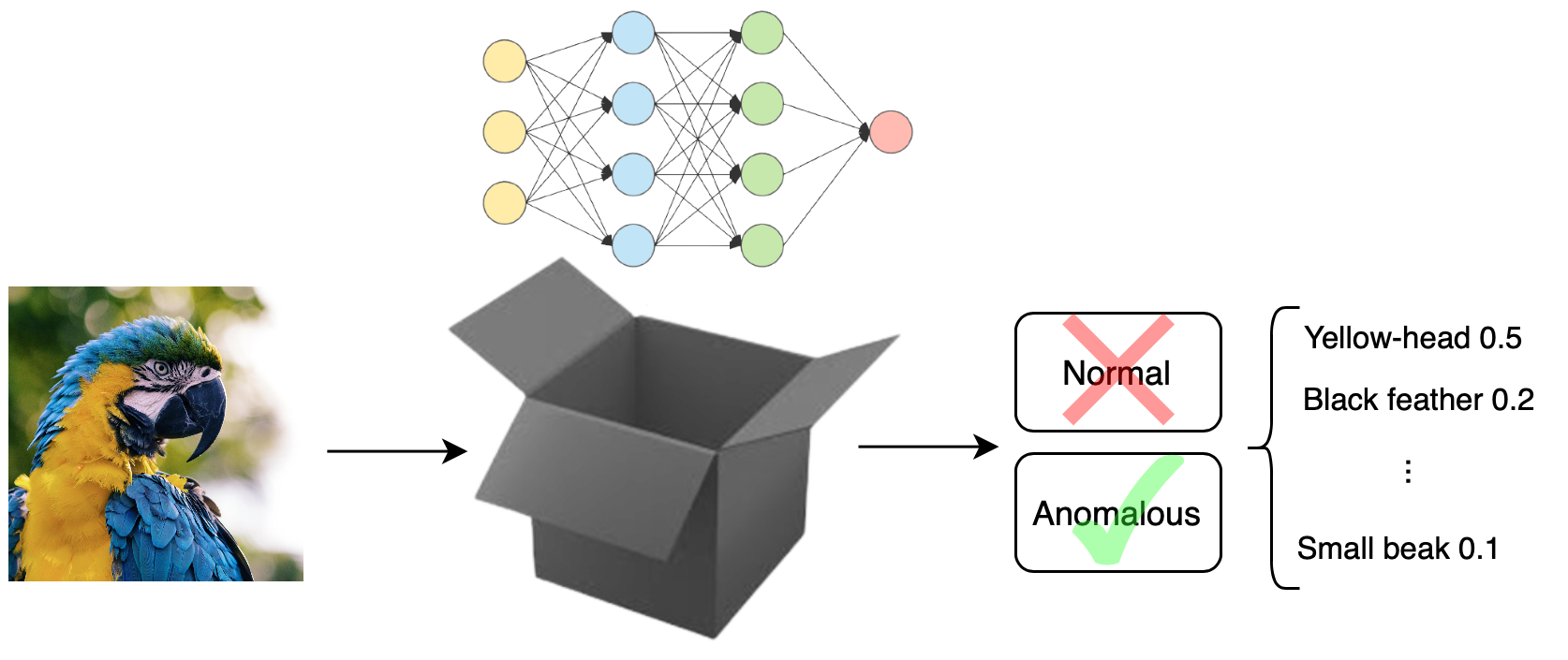

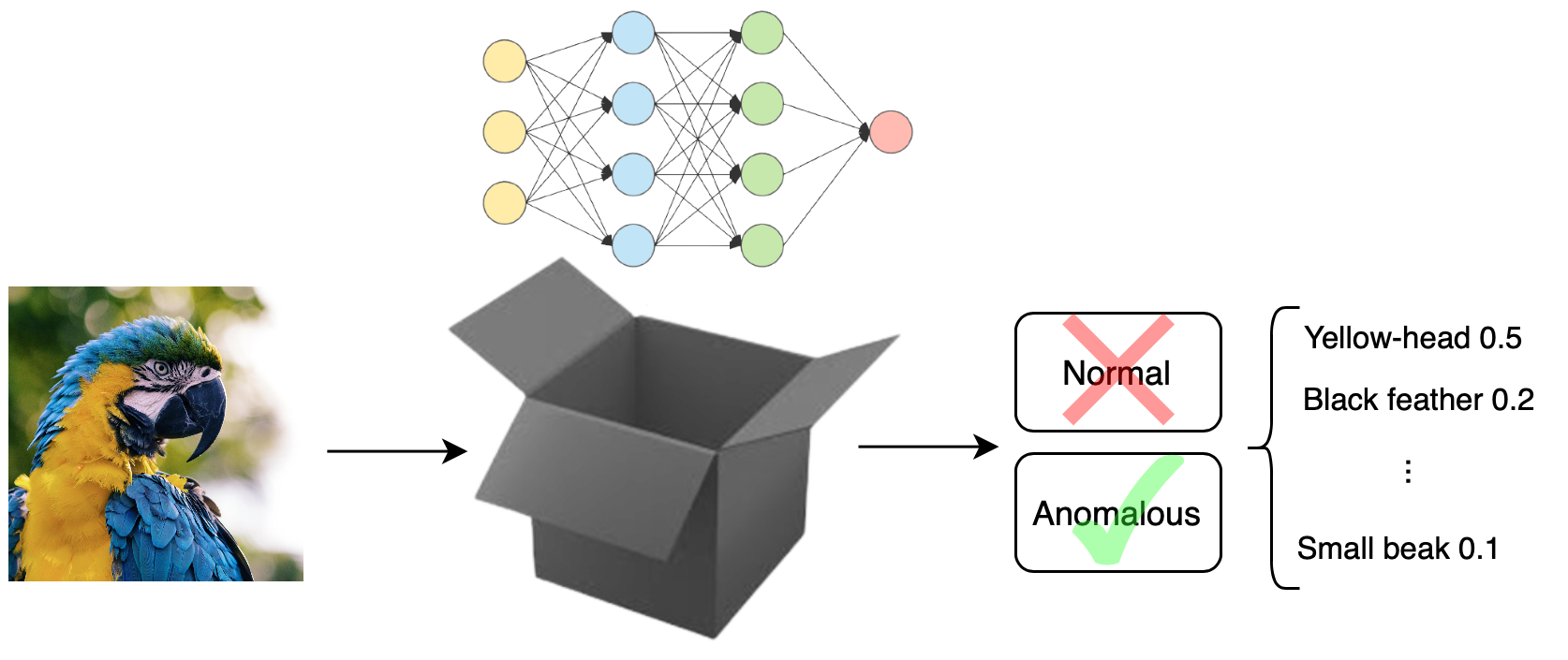

Transparent Anomaly Detection via Concept-based Explanations

Laya Rafiee Sevyeri*,

Ivaxi Sheth*,

Farhood Farahnak*,

Samira Ebrahimi Kahou,

Shirin Abbasinejad Enger

NeurIPS 2023, XAI in Action

[Paper]

We propose Transparent Anomaly Detection Concept Explanations (ACE). ACE provides human-interpretable explanations in the form of concepts alongside its anomaly predictions. Our proposed model achieves results higher than or comparable to those of uninterpretable black-box models.

|

|

Survey on AI Ethics: A Socio-technical Perspective

Dave Mbiazi*, Meghana Bhange*, Maryam Babaei*, Ivaxi Sheth*, Patrik Joslin Kenfack*,

Preprint

[Paper]

This work unifies the current and future ethical concerns of deploying AI into society.

|

|

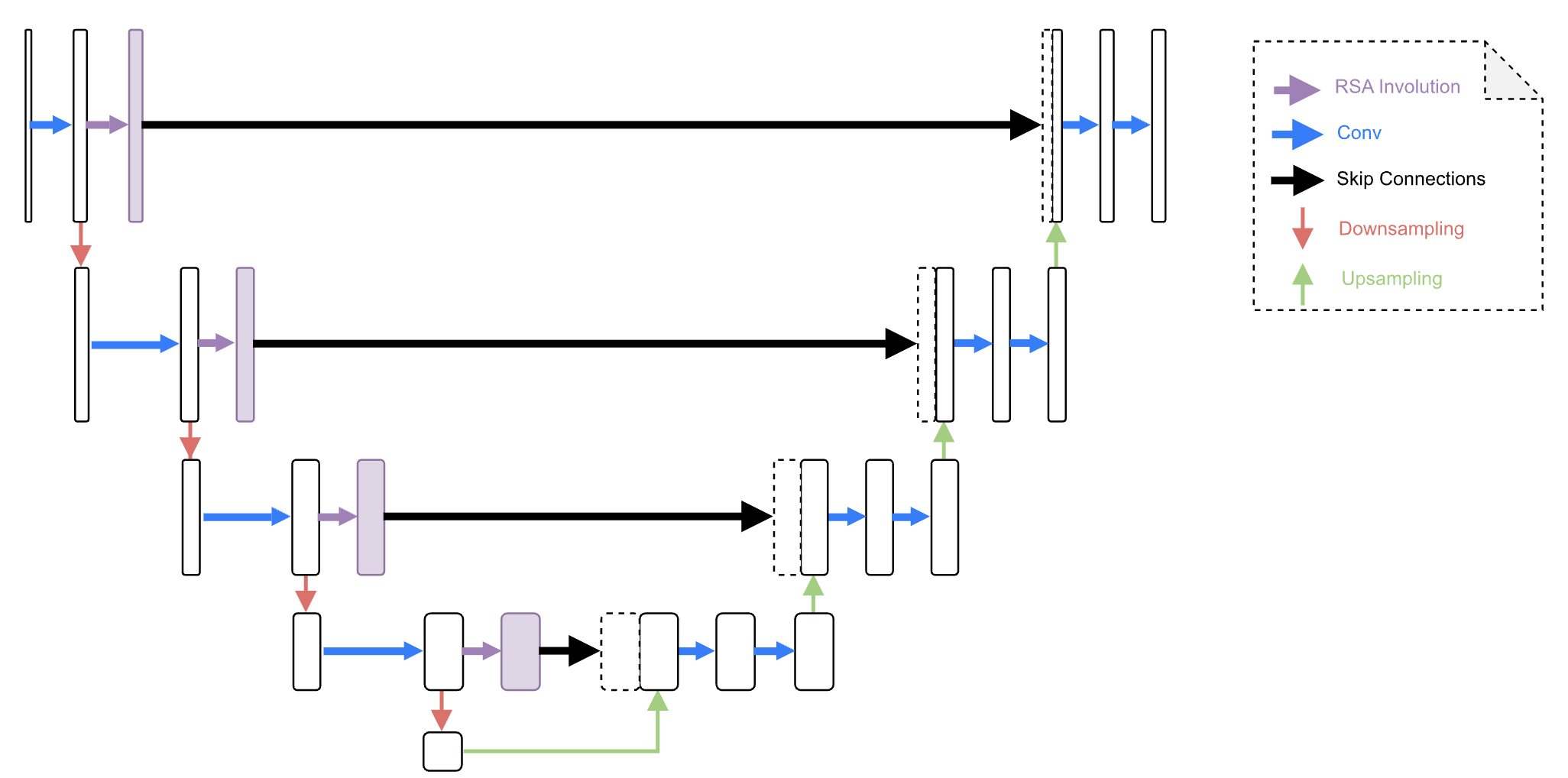

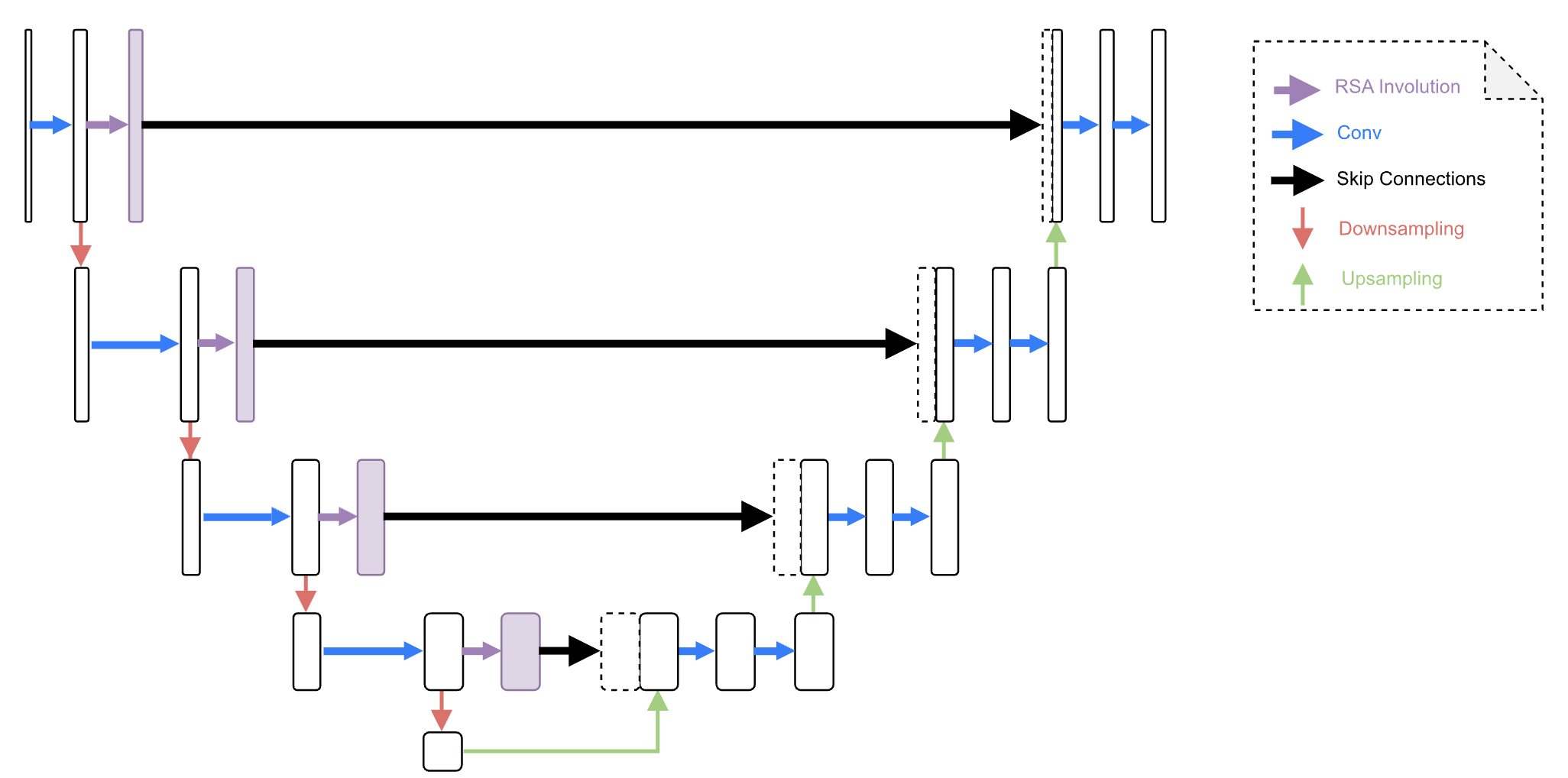

Relational UNet for Image Segmentation

Ivaxi Sheth*,

Pedro Braga*,

Shivakanth Sujit*,

Sahar Dastani,

Samira Ebrahimi Kahou

International Workshop on Machine Learning in Medical Imaging 2023

[Paper, Code]

We propose RelationalUNet, which introduces relational feature transformation to the UNet architecture. RelationalUNet models the dynamics between the visual and depth dimensions of a 3D medical image by introducing Relational Self-Attention blocks in the skip connections.

|

|

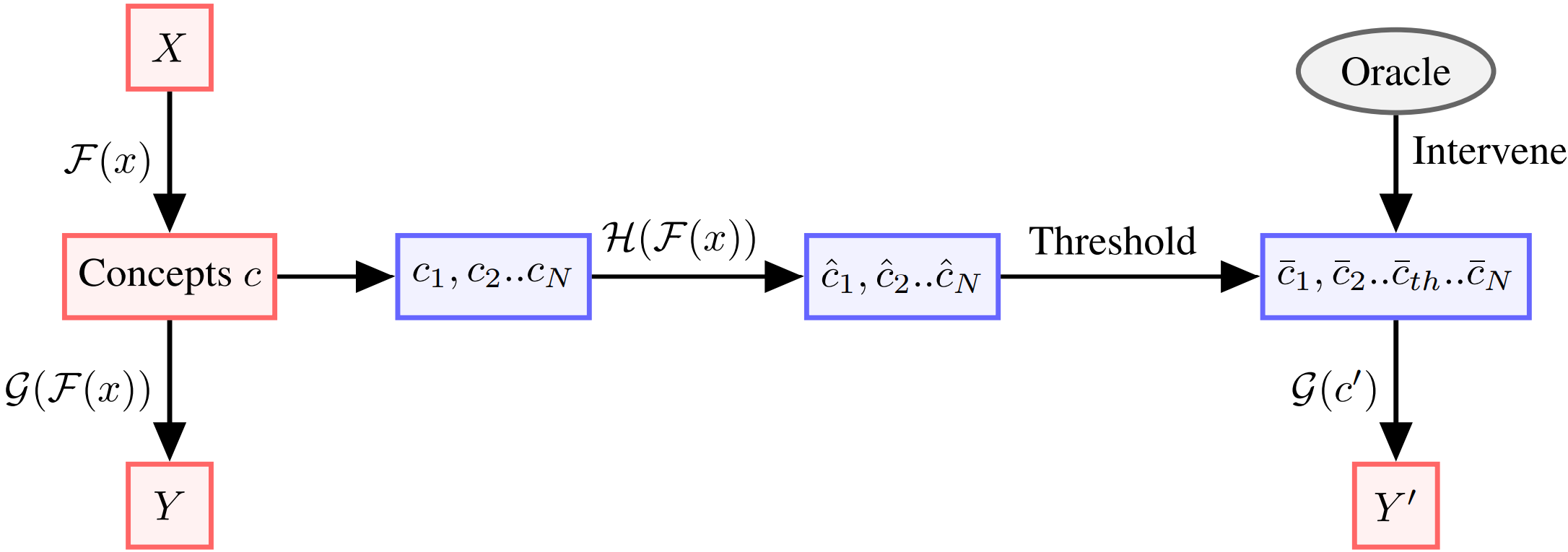

Learning from uncertain concepts via test time interventions

Ivaxi Sheth,

Aamer Abdul Rahman,

Laya Rafiee Sevyeri,

Mohammad Havaei,

Samira Ebrahimi Kahou

NeurIPS 2022, Trustworthy and Socially Responsible Machine Learning Workshop

[Paper]

We propose an uncertainty-based strategy for selecting interventions in Concept Bottleneck Models during inference.

|

FHIST: A Benchmark for Few-shot Classification of Histological Images

Fereshteh Shakeri,

Malik Boudiaf,

Sina Mohammadi,

Ivaxi Sheth,

Mohammad Havaei,

Ismail Ben Ayed,

Samira Ebrahimi Kahou.

In submission

Our benchmark builds few-shot tasks and base-training data with various tissue types, different levels of domain shift stemming from different cancer sites, and different class granularity levels, thereby reflecting realistic clinical settings. We evaluate the performance of state-of-the-art few-shot learning methods, initially designed for natural images, on our histology benchmark.

|

Three-stream network for enriched Action Recognition

Ivaxi Sheth,

CVPRW '21

We propose a three-stream network for action recognition and detection, in which each stream operates on a different input frame rate. We test our work on popular datasets such as Kinetics, UCF-101, and AVA. The results on the AVA dataset in particular show the effectiveness of using attention in each stream.

|

Patents

Hardware implementation of windowed operations in three or more dimensions

Ivaxi Sheth,

Cagatay Dikici,

Aria Ahmadi,

James Imber.

|

System and method of performing convolution efficiently adapting Winograd algorithm

Ferdinando Insalata, Cagatay Dikici, James Imber, Ivaxi Sheth

|

|
